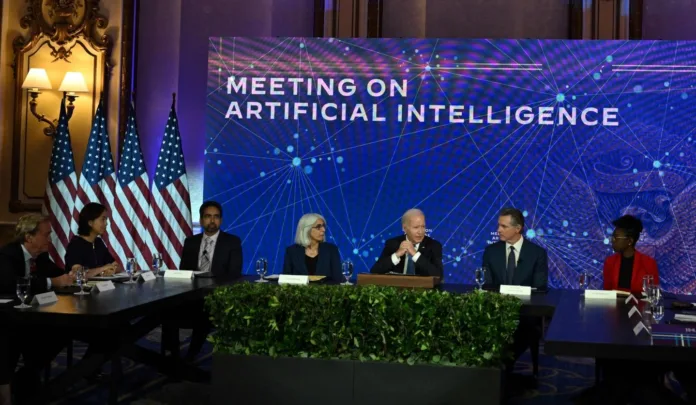

The White House has launched an initiative to address the potential hazards of artificial intelligence (AI) by bringing together seven prominent technology companies, including Amazon, Google, Microsoft, and OpenAI. The companies voluntarily agreed to a set of commitments aimed at protecting consumers and encouraging responsible AI development. These commitments include investments in cybersecurity, research on discrimination, and a watermarking system to identify AI-generated content. While these measures are voluntary, the White House is also working on an executive order to address AI concerns across federal agencies and departments. This article covers the major commitments made by the companies, the context for the White House’s actions, and the implications of this initiative for AI regulation.

The Key Commitments by Top Tech Giants For Safeguarding AI

Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI have voluntarily agreed to a number of measures to support responsible AI development:

Cybersecurity Investments: Companies will invest in securing their AI products and systems against threats such as cyberattacks and biosecurity risks.

Bias Research: Research programs will focus on detecting and correcting bias and discrimination that may be built into AI systems, so that AI applications are fair and equitable.

Watermarking System: A new watermarking system will be introduced to notify consumers when content is generated by AI, improving transparency and accountability in AI-driven media.

Independent Testing: AI models will be tested for safety by third-party specialists in order to detect and reduce possible risks connected with AI systems.

Reporting Flaws: To address potential security and ethical problems, companies will set up mechanisms for reporting flaws in their AI technology.

Openness and Public Reporting: To promote transparency and responsible use, companies will publicly disclose flaws, risks, and biases in their AI systems.

Collaborative Sharing: Companies and the government will exchange trust and safety information in order to handle AI-related concerns together.

Using AI for Societal Benefit: The organizations will use advanced AI systems known as frontier models to address major societal issues.

Context Behind the White House’s Actions For Safeguarding AI

The White House’s commitment to AI protections comes after discussions with technology executives, labor leaders, and civil rights organizations about AI concerns. In May, the administration pledged additional funding and policy guidance for AI research and development. This includes $140 million to establish seven National AI Research Institutes, as well as commitments from industry leaders such as Google, Microsoft, Nvidia, and OpenAI to submit their language models for public review. Furthermore, Senate Majority Leader Chuck Schumer introduced the SAFE Innovation Framework, which calls for legislation to address the possible effects of AI on national security, job loss, and misinformation.

Implications for AI Regulation

The voluntary commitments made by prominent technology companies are a stopgap to mitigate AI risks while longer-term efforts toward comprehensive legislation are explored. However, some critics contend that without accountability measures, voluntary commitments may not ensure responsible AI development. Senator Schumer’s AI regulatory initiative is part of a bipartisan effort to investigate AI governance. While the effort is making headway, tougher measures may be required to produce effective AI legislation that takes both innovation and public safety into account.

Conclusion

The White House’s engagement with leading technology companies on AI safeguards is a significant step towards responsible AI development. These companies’ voluntary agreements reflect a commitment to tackling AI risks and fostering transparency in AI technologies. As the AI landscape quickly advances, the White House’s actions underscore the growing importance of striking a balance between innovation and preserving the safety and rights of AI users. While voluntary precautions are a short-term solution, the push for congressional legislation will shape the future of AI governance, with the aim of a more secure and ethical AI ecosystem.

Disclaimer:

AI was used to conduct research and help write parts of the article. We primarily use the Gemini model developed by Google AI. While AI assisted in creating this content, it was reviewed and edited by a human editor to ensure accuracy, clarity, and adherence to Google's webmaster guidelines.